Following up on PLR Dev Roadmap 2025 - Q1 & Q2 - #2 by koeng

This post is to brainstorm about the plr server.

The main way to interact with PLR is through the Python interface. There is a tiny server in plr.server, but it’s underdeveloped.

It would be cool if the server had an API like this:

/<module>/<device_id>/<command>

e.g.

/lh/0/aspirate

Soon I will create a registry where every frontend class registers and deregisters on setup/stop (for a separate feature).

For frontends, it should be possible to create a dynamic server that creates an endpoint for every method and accepts the kwargs as JSON (for Resources, we might need to pass their name rather than a fully serialized object).
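To make the idea concrete, here is a rough sketch of how such endpoints could be generated by introspection. Everything in it is an assumption for illustration: `FakeLiquidHandler` is a stand-in, not the real PLR frontend, and `build_routes` / `dispatch` are hypothetical helpers. It skips the actual HTTP layer and only shows the mapping from `/<module>/<device_id>/<command>` plus a JSON body to a method call.

```python
import asyncio
import inspect
import json


class FakeLiquidHandler:
    """Hypothetical stand-in for a frontend; not the real PLR LiquidHandler."""

    def __init__(self):
        self.log = []

    async def aspirate(self, resource: str, volume: float):
        # Resources are referred to by name, not as serialized objects.
        self.log.append(("aspirate", resource, volume))
        return {"status": "ok"}

    async def dispense(self, resource: str, volume: float):
        self.log.append(("dispense", resource, volume))
        return {"status": "ok"}


def build_routes(module: str, device_id: int, frontend) -> dict:
    """Create one route per public method of the frontend instance."""
    routes = {}
    for name, method in inspect.getmembers(frontend, predicate=inspect.ismethod):
        if name.startswith("_"):
            continue  # skip private and dunder methods
        routes[f"/{module}/{device_id}/{name}"] = method
    return routes


async def dispatch(routes: dict, path: str, body: str):
    """Decode the JSON kwargs and call the method registered for the path."""
    method = routes.get(path)
    if method is None:
        return 404, {"error": f"unknown endpoint {path}"}
    kwargs = json.loads(body) if body else {}
    try:
        # Check the kwargs against the signature before calling anything.
        inspect.signature(method).bind(**kwargs)
    except TypeError as exc:
        return 400, {"error": str(exc)}
    result = method(**kwargs)
    if inspect.isawaitable(result):
        result = await result
    return 200, result
```

A call would then look like `asyncio.run(dispatch(routes, "/lh/0/aspirate", '{"resource": "plate_A1", "volume": 10}'))`, with `routes = build_routes("lh", 0, FakeLiquidHandler())`. A real server would hang `dispatch` behind whatever web framework is used.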

There should also be an API for (un)assigning resources.
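One possible shape for this, sketched under assumptions: resources are tracked by name only, and the endpoint paths `/resources/assign` and `/resources/unassign` are made up for the example. `ResourceRegistry` is a hypothetical in-memory tree, not PLR's `Resource` class.

```python
import json


class ResourceRegistry:
    """Hypothetical name-based resource tree; not PLR's actual Resource class."""

    def __init__(self, root: str = "deck"):
        self.parent = {root: None}  # resource name -> parent name

    def assign(self, name: str, parent: str) -> None:
        if parent not in self.parent:
            raise KeyError(f"unknown parent {parent!r}")
        if name in self.parent:
            raise ValueError(f"{name!r} is already assigned")
        self.parent[name] = parent

    def unassign(self, name: str) -> None:
        # Unknown names and the root (whose parent is None) are both rejected.
        if self.parent.get(name) is None:
            raise KeyError(f"{name!r} is not an assigned resource")
        # Remove the resource together with everything assigned beneath it.
        for child in [c for c, p in self.parent.items() if p == name]:
            self.unassign(child)
        del self.parent[name]


def handle(registry: ResourceRegistry, path: str, body: str):
    """Map a (hypothetical) endpoint path and JSON body to a registry call."""
    payload = json.loads(body)
    try:
        if path == "/resources/assign":
            registry.assign(payload["name"], payload["parent"])
        elif path == "/resources/unassign":
            registry.unassign(payload["name"])
        else:
            return 404, {"error": f"unknown endpoint {path}"}
    except (KeyError, ValueError) as exc:
        return 400, {"error": str(exc)}
    return 200, {"resources": sorted(registry.parent)}
```

Whether unassigning a parent should also drop its children, as it does here, is a design choice that would need discussion.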

clients

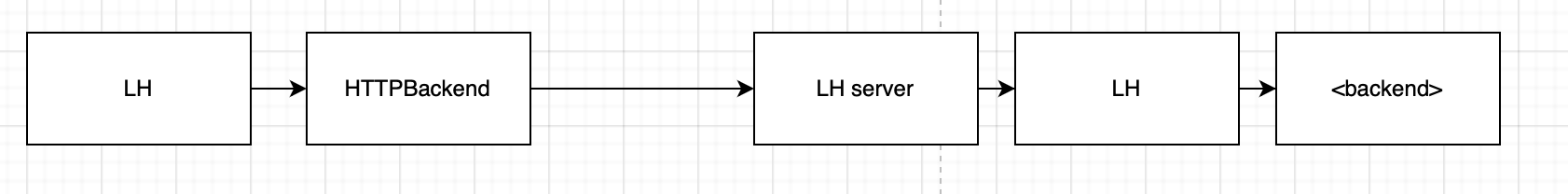

The interface to the PLR server can be any client that speaks HTTP. One interesting option, which partially exists right now, is the PLR HTTPBackend.

Here, users just use PLR the way they are used to. Under the hood, though, commands are sent to a server rather than directly to the machine. On the server, a second lh instance receives all commands and sends them to a backend of choice. Theoretically, it would be possible to chain this, but the backend on the server will probably be one connected to an actual machine.
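The forwarding pattern can be sketched roughly as follows. This is not the existing HTTPBackend: all class names are hypothetical, the transport is an in-process function call standing in for an HTTP POST, and for brevity the server calls its backend directly instead of going through a second lh instance.

```python
import asyncio
import json


class RecordingBackend:
    """Hypothetical stand-in for a backend connected to an actual machine."""

    def __init__(self):
        self.calls = []

    async def aspirate(self, **kwargs):
        self.calls.append(("aspirate", kwargs))

    async def dispense(self, **kwargs):
        self.calls.append(("dispense", kwargs))


class Server:
    """Server side: decodes a request and passes it to the backend of choice."""

    def __init__(self, backend):
        self.backend = backend

    async def handle(self, command: str, body: str) -> str:
        kwargs = json.loads(body)
        await getattr(self.backend, command)(**kwargs)
        return json.dumps({"status": "ok"})


class ForwardingBackend:
    """Client side: looks like a backend, but sends every command to a server."""

    def __init__(self, transport):
        # transport: async callable (command, json_body) -> json_reply.
        # In a real setup this would perform an HTTP request.
        self.transport = transport

    async def _send(self, command: str, kwargs: dict):
        reply = await self.transport(command, json.dumps(kwargs))
        return json.loads(reply)

    async def aspirate(self, **kwargs):
        return await self._send("aspirate", kwargs)

    async def dispense(self, **kwargs):
        return await self._send("dispense", kwargs)


# Client -> server -> machine backend.
machine = RecordingBackend()
server = Server(machine)
client = ForwardingBackend(server.handle)
asyncio.run(client.aspirate(resource="plate_A1", volume=10))
```

Because `ForwardingBackend` exposes the same methods as any other backend here, chaining is just `Server(ForwardingBackend(other_server.handle))`.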